- cross-posted to:

- proxmox@lemmy.world

I have a love/hate relationship with Proxmox Backup Server (PBS). It’s a groundbreaking product, cutting-edge technology, entirely free, and a complete pain in the ass. Because of all of the above, I’ve spent a good deal of time haunting the user forum, irritating the staff, and spreading unpleasant truths about PBS.

So, what’s amazing about Proxmox Backup Server? It’s the realized dream of complete dedupe. There are a couple of similar products out there, but most of what you think you know about backups barely applies to PBS.

PBS should be deployed on SSD, because it’s really a huge database of file chunks. (Literally, they are called .chunks.) For each piece of a backup, PBS checks its .chunks to see if it already has that .chunk, and if it does, it is not copied. Absolute dedupe, every time. A backup job consists of a whole lot of reading and calculating, but as little writing as possible. The actual backup itself, such as it exists, is a set of metadata that says which .chunks will be needed to build the VM files. When a backup is deleted, the metadata is deleted. If the .chunks that the metadata pointed at are not used by any other backup and continue to go untouched for a couple of days, they are purged by garbage collection.
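The chunk-and-index idea can be sketched in a few lines of Python. This is a toy model, not PBS code: `ChunkStore`, `backup`, and `restore` are hypothetical names, the store is an in-memory dict rather than a `.chunks` directory, and the 8-byte chunk size is only there to keep the demo readable.

```python
import hashlib


class ChunkStore:
    """Toy stand-in for the .chunks directory: digest -> chunk bytes."""

    def __init__(self):
        self.chunks = {}
        self.writes = 0  # how many chunks actually had to be stored

    def insert(self, data):
        digest = hashlib.sha256(data).hexdigest()
        if digest not in self.chunks:  # only unseen data costs a write
            self.chunks[digest] = data
            self.writes += 1
        return digest


def backup(store, image, chunk_size):
    """Split image into fixed-size chunks; return the index (list of digests)."""
    return [store.insert(image[i:i + chunk_size])
            for i in range(0, len(image), chunk_size)]


def restore(store, index):
    """Rebuild the image by concatenating the chunks the index points at."""
    return b"".join(store.chunks[d] for d in index)


store = ChunkStore()
disk = b"A" * 8 + b"B" * 8 + b"A" * 8      # three chunks, two of them identical
first = backup(store, disk, chunk_size=8)
second = backup(store, disk, chunk_size=8)  # same data again: nothing new to write
print(store.writes, restore(store, second) == disk)  # prints: 2 True
```

Two backups of a three-chunk disk cost two chunk writes in total. The "backup" is just the list of digests, which is why it is so cheap to keep many of them.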

Think about how this will work out. When you delete a backup, you are just deleting your ability to assemble those .chunks into a disk file; essentially nothing happens to the data on the back end for 2 days. If you immediately ran another backup, _it’s going to run like an incremental_, because all the data is still there. So the only thing it will write is a new metadata set (aka “backup”) and any delta that’s occurred on the VM since the last backup. This is completely different from any sort of backup data management you’ve done before.
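Here is a rough sketch of that delete-then-rebackup behaviour, assuming a simple mark-and-sweep garbage collector. `Datastore` and its methods are hypothetical, timestamps are plain integers in seconds, and `GRACE_SECONDS` uses the "couple of days" figure from above rather than whatever cutoff PBS really applies.

```python
import hashlib

GRACE_SECONDS = 2 * 24 * 3600  # assumption: the "couple of days" from the text


class Datastore:
    def __init__(self):
        self.chunks = {}   # digest -> time the chunk was last touched
        self.backups = {}  # backup name -> list of digests (the metadata)

    def backup(self, name, blocks, now):
        """Store a backup; return how many chunks had to be written."""
        written, index = 0, []
        for block in blocks:
            digest = hashlib.sha256(block).hexdigest()
            if digest not in self.chunks:
                written += 1
            self.chunks[digest] = now  # new or reused, the chunk counts as touched
            index.append(digest)
        self.backups[name] = index
        return written

    def delete_backup(self, name):
        del self.backups[name]  # only the metadata goes away

    def garbage_collect(self, now):
        """Mark chunks still referenced, then sweep chunks untouched too long."""
        for index in self.backups.values():
            for digest in index:
                self.chunks[digest] = now
        stale = [d for d, t in self.chunks.items() if now - t > GRACE_SECONDS]
        for digest in stale:
            del self.chunks[digest]
        return len(stale)


ds = Datastore()
print(ds.backup("monday", [b"one", b"two", b"three"], now=0))      # 3 chunks written
ds.delete_backup("monday")
print(ds.garbage_collect(now=3600))                                 # 0, data is still there
print(ds.backup("tuesday", [b"one", b"two", b"THREE"], now=7200))  # 1, only the delta
print(ds.garbage_collect(now=7200 + GRACE_SECONDS + 1))             # 1, orphan finally purged
```

The deleted backup’s chunks survive the first garbage collection, so the next backup only writes the one block that changed.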

When a backup runs in Proxmox and the backup target is a PBS server, the VM is NOT stunned for a snapshot. The ‘stun and snapshot’ tactic is present in almost every virtual backup system out there in order to get the VM running on a delta disk, leaving the main disk available to be scanned for backup. PBS simply does not do this.

What PBS does instead of a snapshot is hard to describe, but in pursuing their goal of not having a VM stun, they introduced a far worse flaw. The VM can hang on a write if the sector being written is also being backed up at that moment: the guest’s write stays in lockstep with the backup’s storage write until that chunk of data is done backing up. Because of this issue, they introduced another feature that is essentially write caching, which they call fleecing. (I’m told the name fleecing comes from QEMU.) Fleecing brought its own game-killer bugs to the table, only partially fixed in the latest version.
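A very rough way to picture the write stall is a copy-before-write model. It assumes the old contents of a block must be saved somewhere before the guest may overwrite it, and that fleecing saves them to fast local storage instead of the backup target. The function name and the millisecond figures are made up for illustration and are not measurements.

```python
def guest_write_latency_ms(block, backed_up, local_ms, target_ms, fleecing):
    """Estimated latency of one guest write while a backup is running.

    block      -- block number the guest wants to overwrite
    backed_up  -- set of block numbers the backup has already copied
    local_ms   -- cost of one write to the VM's own (fast) storage
    target_ms  -- cost of pushing one block to the backup target
    fleecing   -- if True, old data is parked locally instead of on the target
    """
    if block in backed_up:
        return local_ms                      # nothing to preserve, write goes straight through
    preserve_ms = local_ms if fleecing else target_ms
    return preserve_ms + local_ms            # save the old data first, then do the guest write


done = {0, 1, 2}
print(guest_write_latency_ms(1, done, local_ms=1, target_ms=200, fleecing=False))  # 1
print(guest_write_latency_ms(7, done, local_ms=1, target_ms=200, fleecing=False))  # 201
print(guest_write_latency_ms(7, done, local_ms=1, target_ms=200, fleecing=True))   # 2
```

In this model the guest’s write latency without fleecing is tied to the speed of the backup target, which is exactly what hurts when the target is slow or far away.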

PBS does some really cool site-to-site sync tricks. You can establish a Remote relationship, and then a Sync Job. It’s a nerdy interface, and not at all user friendly, but you can tell it exactly what to sync. They recently added Push-style jobs, which will feel more similar to common backup systems than the original Pull jobs. When you start doing site-to-site sync and your VM backup populations get mixed, you immediately discover the need for Namespaces to segregate them, and that can be an intricate rabbit hole.

I just noticed their site blurb says PBS does physical hosts, which isn’t exactly a lie, but pretty close. PBS is for backing up Proxmox guests.

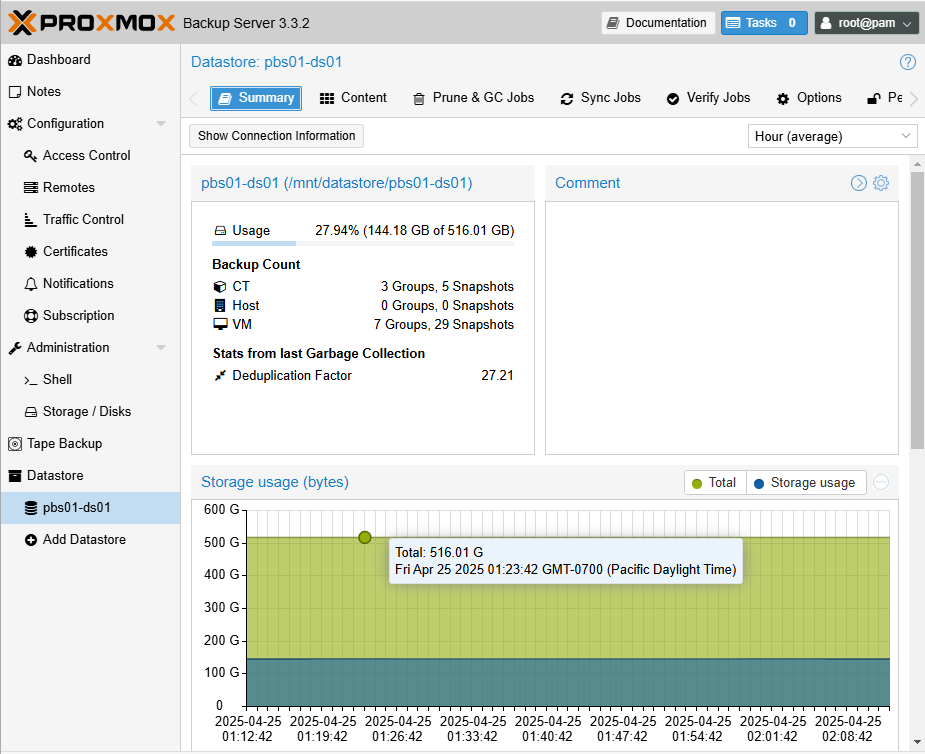

If you run virtual machines professionally, you should know about Proxmox and have at least tried it. If you use Proxmox with any regularity, you should check out PBS. Here’s a shot of my homelab PBS. Note the dedupe factor.

___

Have you noticed any slowness using PBS? I use it to back up my VMs and the backups seem fine, but whenever I go to list the backups - it’s extremely slow and times out sometimes.

I do have my datastore mounted over NFS though so I’m assuming that might be part of my problem.

Sorry about that. Yes, NFS is all of the problem. NFS and SMB are the worst ways to mount PBS storage, by at least an order of magnitude.

You can search the forum for a script published by DerHarry to test the .chunks system and prove it for yourself if you like. I used his script. His results are definitive. NFS mounted in the OS as a PBS datastore is not good. (I adapted the script for testing PBS performance before/after adding an SSD special vdev to a ZFS pool of spinners.)
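If you just want a quick feel for the kind of thing such a test measures, here is a minimal sketch. It is not DerHarry’s script. `bench_chunk_store` is a hypothetical helper that writes many small files into buckets named after the first four hex digits of their SHA-256 digest (my assumption about a reasonable stand-in for the chunk layout), then times `stat()` and read calls on them.

```python
import hashlib
import os
import time


def bench_chunk_store(root, n_chunks=2000, chunk_bytes=4096):
    """Create n_chunks small files under root/.chunks and time basic operations.

    Returns operations per second for create, stat, and read.
    """
    paths = []
    t0 = time.perf_counter()
    for _ in range(n_chunks):
        data = os.urandom(chunk_bytes)
        digest = hashlib.sha256(data).hexdigest()
        bucket = os.path.join(root, ".chunks", digest[:4])
        os.makedirs(bucket, exist_ok=True)
        path = os.path.join(bucket, digest)
        with open(path, "wb") as f:
            f.write(data)
        paths.append(path)
    t1 = time.perf_counter()
    for path in paths:
        os.stat(path)                 # metadata lookup, the expensive part on NFS
    t2 = time.perf_counter()
    for path in paths:
        with open(path, "rb") as f:
            f.read()
    t3 = time.perf_counter()

    def rate(start, end):
        return n_chunks / max(end - start, 1e-9)  # guard against a zero interval

    return {
        "create_per_s": rate(t0, t1),
        "stat_per_s": rate(t1, t2),
        "read_per_s": rate(t2, t3),
    }
```

Run it once against a directory on local disk and once against a directory on the NFS mount, for example `bench_chunk_store("/mnt/nfs-test")` (a made-up path), and compare the numbers. The files it creates are left in place, so point it at a scratch directory and clean up afterwards.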

Using even that same hardware, there are a couple other options that work better.

Attach the NFS share to the PVE host and use it as storage. Create a large qcow2 disk there as the PBS data disk. I know, this is the same thing, right? No. It works better. (I’m doing this in some locations. Not great, but better than direct NFS access.)

Use iSCSI and not NFS. It’s faster and better designed for this use. (YMMV. I’m doing this in one location, gonna roll it back in another location.)

Depending on the NAS resources, perhaps you can run the PBS as a guest of the NAS itself. Sitting right on top of the data instead of network access is a huge advantage. (I do this everywhere the NAS has the resources to run a guest.)

Der Harry’s post … https://forum.proxmox.com/threads/datastore-performance-tester-for-pbs.148694/