I thought I’d better make a post about the recent lemmy.world federation issues, since not everyone sees the daily posts.

TL;DR:

- Inbound federation from lemmy.world is about 18 hours behind and slowly catching up.

- Outbound is fine: if you post or comment on something in a lemmy.world community, it is federated to other users almost instantly, but if they reply to you, it will take about 18 hours before you see the reply.

- It was caused by a suspected Kbin bug combined with a Lemmy bug.

- Federation with all other instances seems fine, both inbound and outbound.

On Thursday last week, Lemmy.world began receiving hundreds of thousands of “activities” (actions such as comments, posts, and upvotes) from Kbin, directed at its communities. Those activities are then federated from Lemmy.world to every instance with users subscribed to those communities.

Unfortunately, this also exposed a big issue with how Lemmy handles federation: activities are sent to each instance one at a time, and the next one can’t be sent until the previous one has finished. This means the number of activities your server can receive is limited by the network latency between the sending and receiving servers. In our case, because we are hosted in Auckland and Lemmy.world is hosted in Finland, we can receive only about 4 or 5 activities per second from them.
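Because each activity has to wait for the one before it, the delivery rate is capped at roughly one activity per network round trip. The Python sketch below shows that arithmetic. The round-trip times are hypothetical values chosen for illustration, not measurements of our link, and receiver processing time is ignored unless you pass one in.

```python
def max_activities_per_second(round_trip_s: float, processing_s: float = 0.0) -> float:
    """Upper bound on throughput when activities are sent strictly one at a time.

    The sender can't start the next activity until the previous one has been
    acknowledged, so each activity costs at least one network round trip plus
    whatever time the receiving server spends processing it.
    """
    return 1.0 / (round_trip_s + processing_s)


# Hypothetical round-trip times in seconds, chosen only for illustration.
examples = {
    "same data centre (20 ms)": 0.020,
    "far side of the world (200 ms)": 0.200,
    "far side of the world (250 ms)": 0.250,
}
for label, rtt in examples.items():
    print(f"{label}: about {max_activities_per_second(rtt):.0f} activities/s")
```

With a 200 to 250 ms round trip the ceiling works out to 4 or 5 activities per second, in line with what we see, while a server a few milliseconds from lemmy.world gets ten times that or more on the same hardware.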

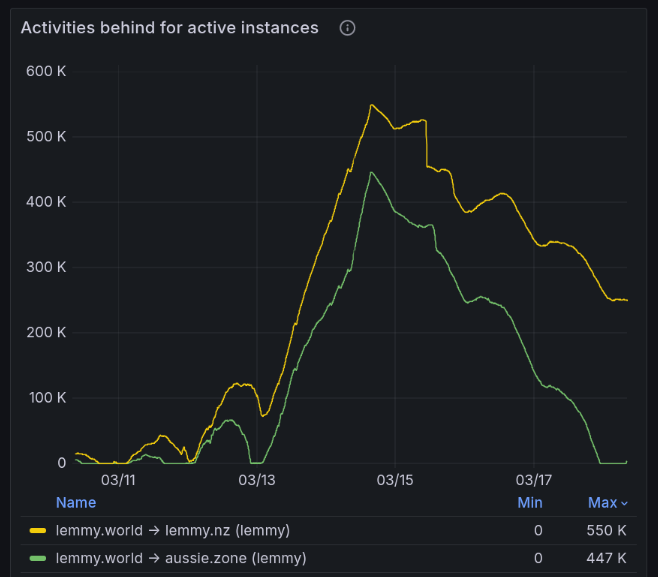

When the Kbin issue happened, Lemmy.world suddenly had hundreds of thousands of activities to send us on top of the normal flow of content, and we fell far behind on Lemmy.world content. At the peak we were around 28 hours (about 550k activities) behind. We are now around 19 hours behind and will likely stay there until this afternoon, when it should improve further (it’s currently peak time on the other side of the world, so new content is still arriving quickly).
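Whether the backlog shrinks depends on the gap between how fast we can receive activities and how fast lemmy.world users create new ones. This small Python sketch estimates catch-up time. The 550k figure is the peak backlog mentioned above, but both rates are hypothetical, picked only to show the shape of the calculation.

```python
import math


def hours_to_catch_up(backlog: int, receive_per_s: float, new_per_s: float) -> float:
    """Hours until a backlog clears, given a fixed receive rate and a steady
    rate of new activities joining the queue.

    Returns math.inf when new activities arrive at least as fast as they can
    be received, because the queue never shrinks in that case.
    """
    net_drain = receive_per_s - new_per_s
    if net_drain <= 0:
        return math.inf
    return backlog / net_drain / 3600


# 550k is the peak backlog from the post; the two rates are hypothetical.
print(f"{hours_to_catch_up(550_000, receive_per_s=4.5, new_per_s=3.0):.0f} hours")
print(hours_to_catch_up(550_000, receive_per_s=4.5, new_per_s=5.0))
```

This is also why off-peak hours matter: when fewer new activities arrive, the net drain rate rises and the backlog falls faster. If new content ever arrives faster than we can receive it, the estimate becomes infinite, because the backlog would never clear.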

Here is a graph of the progress. Aussie.zone has the same problem, but it is closer to Europe, where lemmy.world is hosted, so the latency issue is less pronounced for them. We are one of the worst-affected instances, since it’s hard to get much further from Finland than we are.

As you can see, Aussie.zone, being a bit closer, was hit hard but not as hard as us, and has now recovered. We are probably still days away from near-real-time federation with lemmy.world, but each day brings a noticeable improvement.

Other than picking up the server and moving it to Europe, there doesn’t seem to be much we can do except wait and hope the Lemmy bug is fixed before lemmy.world grows so large that its users create content faster than we can receive it.

There have been some suggestions for short-term band-aids. One is to set up a proxy in Europe that filters out certain traffic. For example, we could stop downvotes from being passed on to us, reducing the amount of traffic coming our way (since Lemmy sends everything one at a time). I’m not super keen on doing anything that gets us out of sync with the other instances, though.
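To make the proxy suggestion more concrete, here is a minimal Python sketch of the filtering decision such a proxy might make. It assumes downvotes arrive as ActivityStreams `Dislike` activities, sometimes wrapped in an `Announce` by the community that forwards them; that matches how Lemmy appears to federate votes, but it would need checking against real traffic. `DROPPED_TYPES`, `inner_type`, and `should_forward` are hypothetical names, and this is not something we are running.

```python
import json
from typing import Optional

# Hypothetical policy: drop downvotes, pass everything else through.
DROPPED_TYPES = {"Dislike"}


def inner_type(activity: dict) -> Optional[str]:
    """Return the type of the activity a user actually performed.

    Community actions are assumed to arrive wrapped in an Announce whose
    "object" is the original activity, so unwrap one level when present.
    """
    obj = activity.get("object")
    if activity.get("type") == "Announce" and isinstance(obj, dict):
        return obj.get("type")
    return activity.get("type")


def should_forward(body: bytes) -> bool:
    """Return True if the proxy should pass this inbox POST on to our server."""
    activity = json.loads(body)
    return inner_type(activity) not in DROPPED_TYPES


downvote = {"type": "Announce", "object": {"type": "Dislike", "object": "https://example.com/post/1"}}
comment = {"type": "Announce", "object": {"type": "Create", "object": {"type": "Note"}}}
print(should_forward(json.dumps(downvote).encode()))  # False
print(should_forward(json.dumps(comment).encode()))   # True
```

A real proxy would also have to acknowledge dropped activities itself so lemmy.world moves on to the next one, and forward everything else without altering it, since the activities are signed by the sending server.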

We could of course move our hosting to Europe, but that would be a lot more expensive (we’d have to pay for it instead of being free-loaders), and the site would feel more sluggish: the latency would then hit users on every page load instead of only affecting behind-the-scenes federation.

For now we are slowly getting back in sync with lemmy.world, and as long as there aren’t more Kbin bugs we should be OK. Unfortunately, blocking Kbin.social doesn’t seem to help, because lemmy.world still tries to send us content from Kbin.social users. That content is rejected by our server rather than never being sent, so each of those activities still takes up a slot.

If you have any questions, suggestions, or concerns, reply to this post!