DEF CON Infosec super-band the Cult of the Dead Cow has released Veilid (pronounced vay-lid), an open source project applications can use to connect up clients and transfer information in a peer-to-peer decentralized manner.

The idea is that apps – mobile, desktop, web, and headless – can find and talk to each other across the internet privately and securely without having to go through centralized and often corporate-owned systems. Veilid provides code for app developers to drop into their software so that their clients can join and communicate in a peer-to-peer community.

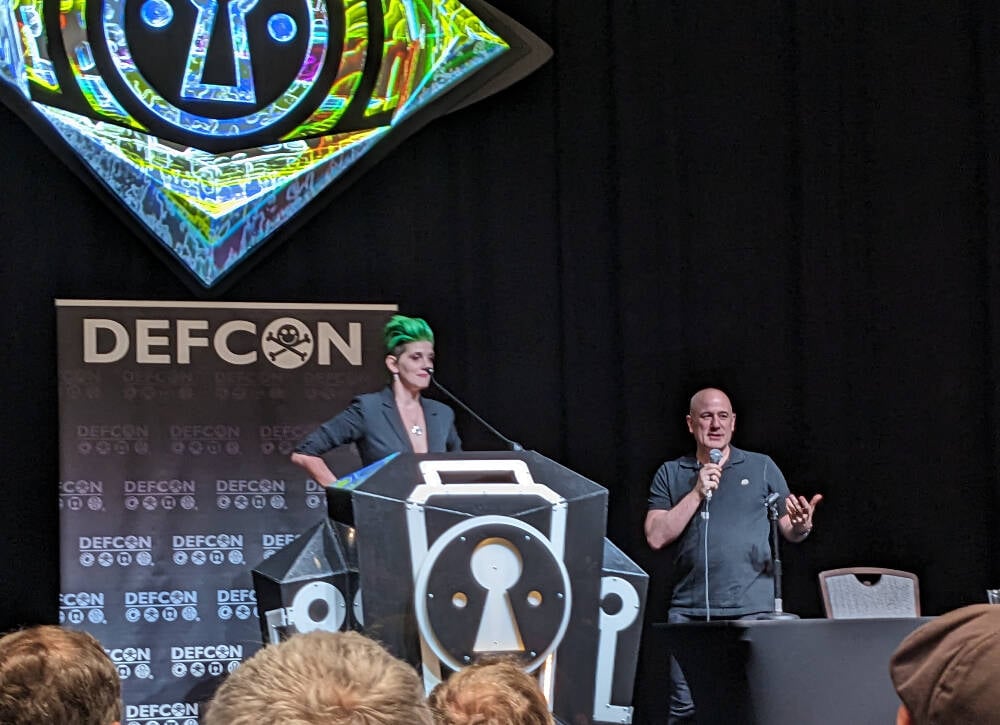

In a DEF CON presentation today, Katelyn “medus4” Bowden and Christien “DilDog” Rioux ran through the technical details of the project, which has apparently taken three years to develop.

The system, written primarily in Rust with some Dart and Python, takes aspects of the Tor anonymizing service and the peer-to-peer InterPlanetary File System (IPFS). If an app on one device connects to an app on another via Veilid, it shouldn’t be possible for either client to know the other’s IP address or location from that connectivity, which is good for privacy, for instance. The app makers can’t get that info, either.

Veilid’s design is documented here, and its source code is here, available under the Mozilla Public License Version 2.0.

“IPFS was not designed with privacy in mind,” Rioux told the DEF CON crowd. “Tor was, but it wasn’t built with performance in mind. And when the NSA runs 100 [Tor] exit nodes, it can fail.”

Unlike Tor, Veilid doesn’t run exit nodes. Each node in the Veilid network is equal, and if the NSA wanted to snoop on Veilid users like it does on Tor users, the Feds would have to monitor the entire network, which hopefully won’t be feasible, even for the No Such Agency. Rioux described it as “like Tor and IPFS had sex and produced this thing.”

“The possibilities here are endless,” added Bowden. “All apps are equal, we’re only as strong as the weakest node and every node is equal. We hope everyone will build on it.”

Each copy of an app using the core Veilid library acts as a network node that can communicate with other nodes and uses a 256-bit public key as its ID. There are no special nodes, and there’s no single point of failure. The project supports Linux, macOS, Windows, Android, iOS, and web apps.

Veilid can talk over UDP and TCP, and connections are authenticated, timestamped, strongly end-to-end encrypted, and digitally signed to prevent eavesdropping, tampering, and impersonation. The cryptography involved has been dubbed VLD0, and uses established algorithms since the project didn’t want to risk introducing weaknesses from “rolling its own,” Rioux said.

This means XChaCha20-Poly1305 for encryption, Ed25519 elliptic-curve signatures for public-private-key authentication and signing, X25519 for DH key exchange, BLAKE3 for cryptographic hashing, and Argon2 for password hash generation. These could be switched out for stronger mechanisms if necessary in future.
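To make the "authenticated, timestamped, tamper-evident" properties concrete, here is a minimal sketch of a message envelope. It is not Veilid's wire format or API. Python's standard library has no Ed25519 or XChaCha20-Poly1305, so HMAC-SHA256 with a shared key stands in for the digital signature, and nothing is encrypted. The names `seal`, `open_envelope`, and `MAX_SKEW_SECONDS` are hypothetical and exist only for this illustration.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical freshness window for this sketch; not a Veilid constant.
MAX_SKEW_SECONDS = 30


def seal(key: bytes, sender_id: str, body: str, now: Optional[float] = None) -> dict:
    """Wrap a message with a timestamp and an authentication tag.

    HMAC-SHA256 is a stand-in here; a real design would sign with the
    sender's private key so that receivers cannot forge messages.
    """
    ts = time.time() if now is None else now
    payload = json.dumps({"from": sender_id, "ts": ts, "body": body}, sort_keys=True)
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def open_envelope(key: bytes, envelope: dict, now: Optional[float] = None) -> dict:
    """Verify the tag first, then the timestamp, and return the message."""
    expected = hmac.new(key, envelope["payload"].encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched.
    if not hmac.compare_digest(expected, envelope["tag"]):
        raise ValueError("tag mismatch: altered message or wrong key")
    message = json.loads(envelope["payload"])
    current = time.time() if now is None else now
    if abs(current - message["ts"]) > MAX_SKEW_SECONDS:
        raise ValueError("stale timestamp: possible replay")
    return message
```

Checking the tag before parsing the payload means a tampered message is rejected before any of its contents are trusted, and the timestamp check limits how long a captured message can be replayed.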

Files written to local storage by Veilid are fully encrypted, and encrypted table store APIs are available for developers. Keys for encrypting device data can be password protected.

“The system means there’s no IP address, no tracking, no data collection, and no tracking – that’s the biggest way that people are monetizing your internet use,” Bowden said.

“Billionaires are trying to monetize those connections, and a lot of people are falling for that. We have to make sure this is available,” Bowden continued. The hope is that applications will include Veilid and use it to communicate, so that users can benefit from the network without knowing all the above technical stuff: it should just work for them.

To demonstrate the capabilities of the system, the team built a Veilid-based secure instant-messaging app along the lines of Signal called VeilidChat, using the Flutter framework. Many more apps are needed.

If it takes off in a big way, Veilid could put a big hole in the surveillance capitalism economy. It’s been tried before with mixed or poor results, though the Cult has a reputation for getting stuff done right. ®

While the pirate in me says “hell yeah!”, the system administrator in me says “Fuuuuuuuck”. I was once part of an IRC network, and one of the biggest issues we had was with Brazilians who would break our rules and get banned. Just a minute or two later, they were back. It got so bad that we just said “Fuck it. We’re banning all of Brazil.” Not an ideal solution, but it beats spending our time chasing the majority offenders. It’s the 80/20 rule, where 80% of your problems are caused by 20% of your users.

Now let’s pretend somebody builds their new app around this new tech. I love the concept, but how do you keep order? How do you ensure people follow the rules? The only thing keeping users in line would be the fear of losing their “brand” (their username, their reputation). If the new app is something like a chatroom, there’s no “brand” to be had, and you can simply use a new name. It would, obviously, be very different if the app were based around file hosting like Google Drive, because you don’t want to lose your files, but anything with low retention will likely be rife with misconduct due to anonymity.

On the other hand, it would allow for a completely open internet, that no single government can shut down, which we’re seeing happening more and more, with China, Iran, Russia, and Myanmar all shutting down the Internet, or portions of it, when those in power feel there’s a threat to the status quo.

One possibility is to allow users to join a controlled allowlist (or a blocklist, though that runs more into that problem), where some actor acts as a trust authority (which the user picks). This keeps the P2P model while still allowing for large networks since every individual doesn’t have to be a “server admin”. A user could also pick several trust authorities.

Essentially, the network would act as a framework for “centralized” groups, while identity remains completely its own.
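The trust-authority idea above can be sketched in a few lines. This is only an illustration of the commenter's proposal, not anything Veilid provides: `TrustAuthority`, `User`, and the `quorum` parameter are hypothetical names, and peer IDs are plain strings standing in for public keys.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class TrustAuthority:
    """An actor that publishes an allowlist of peer IDs it vouches for."""
    name: str
    allowed: Set[str] = field(default_factory=set)

    def vouches_for(self, peer_id: str) -> bool:
        return peer_id in self.allowed


@dataclass
class User:
    """A user who picks one or more authorities instead of moderating alone."""
    authorities: List[TrustAuthority]
    quorum: int = 1  # how many chosen authorities must vouch for a peer

    def accepts(self, peer_id: str) -> bool:
        votes = sum(a.vouches_for(peer_id) for a in self.authorities)
        return votes >= self.quorum


mods = TrustAuthority("mods", {"alice", "bob"})
club = TrustAuthority("club", {"bob"})

lenient = User([mods, club])           # any one authority is enough
strict = User([mods, club], quorum=2)  # both authorities must agree
```

With these values, `lenient.accepts("alice")` is true while `strict.accepts("alice")` is false, because only one of the two authorities lists her. Each user chooses the authorities and the quorum locally, so no single authority controls who can talk to whom.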

This is solving a non-problem. Yeah, stupid script kiddies and trolls might care, but that is noise that's easily blocked. The actual people causing harm, committing massive crimes that flood the system with government, or causing massive DoS attacks, none of them care or even want to have something they could lose on a system like this. It’s better to be anonymous and not have a brand.

Look at what happens here in the Fediverse. People take time to exclude the havens of the problematic and that resolves enough of the issue to make the services work. But that means that someone is making decisions, and that someone can be targeted to take down a site or to not defederate even when the community thinks it’s best. There is still a human involved that can be bought or beaten.

The only way to make a system where people follow the rules is to make a place where people don’t care to break them. Rules give those who follow them justification for punishing those who don’t. They don’t actually stop people from breaking them.

I think if this system can be hardened against attacks and it’s easy to deal with spam, then we all just coexist with the shit that happens in the background we don’t see.

I can’t imagine a successful, open social network based on this. The entire value of social networks is the moderation (in the same way any bar or club keeps certain people out, via rules, signals, or obscurity, and allows likeminded people to relax and socialize).

I love that this project exists for other use cases. And I could see invite-only, small social networks forming. I just don’t think you’d want to build a Twitter or Reddit clone using it.

I mean, people can already use VPNs or whatever to circumvent protocol-level blocks. You prevent that with usernames or email verification or some equivalent, and there’s no reason you wouldn’t just keep doing that in these new apps.

True. Regardless of nationality, background, or interests, moderation will always be a “problem” in these platforms. Sadly the same tool that can target these obvious spammers can be used to silence honest minorities, and the boundary between these groups is also not set in stone.

I believe financial consequences can be very useful to make it expensive to spam or be abusive.

For example, for a user to access an app:

The user Reputation and distribution of fines:

That’s a very interesting approach, and may work for very specific applications. It seems unlikely most platforms or apps would adopt such an approach since it would be a very high burden to overcome for users to sign up, which is what lets an app or platform grow. People are rather attached to money, but there’s also the other side of this. A user may not care about the fine and continue to break the rules as “the cost of doing business” because they’re wealthy.

It’s definitely going to be a technology that drives anonymity, and everything that comes with it, for better or worse. I can see a lot of good this can do, but also a lot of crime it can facilitate.

I agree. There is a potential barrier to entry, and growth. I argue:

The first point is the most important IMHO: privacy users accept the learning curve and inconvenience because they believe privacy is more important. Because of this, I believe the burden is not as high as we think, and that a ‘free to play’ alternative means of accessing privacy-respecting apps (by this idea or something else) is as essential to supporting and protecting privacy as E2EE vs server-side encryption.

Hey, those were exactly my thoughts after reading it. Let’s speak more clearly.

A group uses the protocol to set up a platform to distribute illegal stuff: child porn, a drug marketplace, warez…

Sure, it might become the “bad protocol” in the news, and people and industry will try to blame and block it. But because of its decentralized structure, like the onion network, that is hard to do.

Another aspect that should be thought about is governments in repressive countries: Iran, North Korea, China, Russia… you name it.

You could block the protocol, but is it set up by design to make it actually hard for an NGFW to just recognize the packets by their headers and filter them? I didn’t check in detail, but I hope they implement some mechanisms to make this hard.